Using Amazon’s Kubernetes Distribution Everywhere with Amazon EKS Distro

Running Kubernetes on a public cloud is easy, especially with a managed service like Amazon EKS (Elastic Kubernetes Service). EKS is one of the most popular and widely used Kubernetes distributions. With EKS, you don’t need to manage the control plane nodes, etcd nodes, or any other control plane components, so you can focus on your applications. In real-life scenarios, however, you sometimes need to run Kubernetes clusters on-premises, whether because of regulatory restrictions, compliance requirements, or the need for the lowest possible latency when accessing your clusters or applications.

There are many Kubernetes distributions out there, and most of them are CNCF certified. But that means you have to choose among many options, then try and implement the one that suits your needs. You also need to review the security of the distribution and find a suitable deployment tool. In other words, we all need standardization.

In December 2020, AWS announced EKS Distro. EKS Distro is the Kubernetes distribution that managed Amazon EKS is based on and uses, and it allows you to deploy secure and reliable Kubernetes clusters in any environment. With EKS Distro, you can use the same tooling and the same versions of Kubernetes and its dependencies as EKS. You don’t need to worry about security patching the distribution either, because every version of EKS Distro includes the latest patches, and EKS Distro follows the same process EKS uses to verify Kubernetes versions. That means you are always using a reliable and tested Kubernetes distribution in your environment.

EKS Distro is an Open Source project that lives on GitHub. You can check out the repo from this link: https://github.com/aws/eks-distro/

You can install EKS Distro on bare-metal servers, on virtual machines in your own data centers, or even in other public cloud providers’ environments.

Unlike EKS, with EKS Distro you have to manage all the control plane nodes, etcd nodes, and control plane components yourself. That brings some extra operational burden, but not having to worry about the security or reliability of the Kubernetes distribution you are using is a huge benefit.

EKS Deployment Options Comparison Table

As you can see from the screenshot above, each EKS deployment option has its own features. The right column lists the options and features of EKS Distro. As I mentioned before, with EKS Distro you need to have your own infrastructure and manage the control plane yourself. You can also use different third-party CNI plugins according to your needs. The biggest difference is that, unlike EKS Anywhere, EKS Distro has no Enterprise Support offering from AWS.

The project is on GitHub and is supported by the community. When you have a problem or want to contribute to the project, you can file an issue or look for solutions in previous issues on the repository.

Let’s see EKS Distro in action!

When installing EKS Distro, you can choose a launch partner’s installation option or use familiar community tools like kubeadm or kops.

I will demonstrate the installation of EKS Distro with kubeadm in this blog post.

First of all, for the installation with kubeadm, you need an RPM-based Linux system. I am using a CentOS system for this demonstration.

I have installed Docker 19.03, disabled swap, and disabled SELinux on the machine.

I will install kubelet, kubectl, and kubeadm on the machine with the commands below. I will install Kubernetes 1.19 in this demonstration.

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

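The yum packages have already placed these three binaries in /usr/bin, so the downloads below use -O to overwrite them. Without it, wget would keep the existing files and save the new ones as kubelet.1, kubeadm.1, and kubectl.1.

cd /usr/bin

sudo wget -O kubelet https://distro.eks.amazonaws.com/kubernetes-1-19/releases/4/artifacts/kubernetes/v1.19.8/bin/linux/amd64/kubelet

sudo wget -O kubeadm https://distro.eks.amazonaws.com/kubernetes-1-19/releases/4/artifacts/kubernetes/v1.19.8/bin/linux/amd64/kubeadm

sudo wget -O kubectl https://distro.eks.amazonaws.com/kubernetes-1-19/releases/4/artifacts/kubernetes/v1.19.8/bin/linux/amd64/kubectl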

sudo chmod +x kubeadm kubectl kubelet

sudo systemctl enable kubelet
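The three download URLs above share one pattern: release branch, release number, Kubernetes version, and binary name. As a minimal sketch, the pattern can be captured in a small helper so that moving to another release means changing three variables. The variable and function names here are my own and are not part of EKS Distro.

```shell
# Sketch: rebuild the EKS Distro download URLs used in this post.
# RELEASE_BRANCH, RELEASE_NUMBER, KUBE_VERSION and eksd_artifact_url are
# illustrative names chosen for this example.
set -eu

RELEASE_BRANCH="1-19"    # EKS Distro release branch
RELEASE_NUMBER="4"       # EKS Distro release number within that branch
KUBE_VERSION="v1.19.8"   # Kubernetes version shipped in that release

# Print the artifact URL for one binary (kubelet, kubeadm or kubectl).
eksd_artifact_url() {
  printf 'https://distro.eks.amazonaws.com/kubernetes-%s/releases/%s/artifacts/kubernetes/%s/bin/linux/amd64/%s\n' \
    "$RELEASE_BRANCH" "$RELEASE_NUMBER" "$KUBE_VERSION" "$1"
}

# Print the three URLs; nothing is downloaded here.
for binary in kubelet kubeadm kubectl; do
  eksd_artifact_url "$binary"
done
```

Each printed URL can then be passed to wget exactly as in the commands above.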

After enabling the kubelet service, I add some kubelet arguments in the kubeadm-flags.env file.

sudo mkdir -p /var/lib/kubelet

sudo vi /var/lib/kubelet/kubeadm-flags.env

KUBELET_KUBEADM_ARGS="--cgroup-driver=systemd --network-plugin=cni --pod-infra-container-image=public.ecr.aws/eks-distro/kubernetes/pause:3.2"

I will pull the necessary EKS Distro container images and tag them accordingly.

sudo docker pull public.ecr.aws/eks-distro/kubernetes/pause:v1.19.8-eks-1-19-4;\

sudo docker pull public.ecr.aws/eks-distro/coredns/coredns:v1.8.0-eks-1-19-4;\

sudo docker pull public.ecr.aws/eks-distro/etcd-io/etcd:v3.4.14-eks-1-19-4;\

sudo docker tag public.ecr.aws/eks-distro/kubernetes/pause:v1.19.8-eks-1-19-4 public.ecr.aws/eks-distro/kubernetes/pause:3.2;\

sudo docker tag public.ecr.aws/eks-distro/coredns/coredns:v1.8.0-eks-1-19-4 public.ecr.aws/eks-distro/kubernetes/coredns:1.7.0;\

sudo docker tag public.ecr.aws/eks-distro/etcd-io/etcd:v3.4.14-eks-1-19-4 public.ecr.aws/eks-distro/kubernetes/etcd:3.4.13-0
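The pull and tag commands above follow one rule: each EKS Distro image is retagged under the kubernetes path of the repository, with the short tag that kubeadm will look for during init. Here is a rough sketch of the same mapping as a loop. The function name and the DRY_RUN switch are my own additions for illustration, and the image names are copied from the commands above.

```shell
# Sketch: the same pull/tag mapping as above, expressed as a loop.
# image_plan and DRY_RUN are illustrative names, not EKS Distro tooling.
set -eu

REPO="public.ecr.aws/eks-distro"
EKSD_SUFFIX="eks-1-19-4"

# One line per image: <source image> <target tag>
image_plan() {
  cat <<EOF
$REPO/kubernetes/pause:v1.19.8-$EKSD_SUFFIX $REPO/kubernetes/pause:3.2
$REPO/coredns/coredns:v1.8.0-$EKSD_SUFFIX $REPO/kubernetes/coredns:1.7.0
$REPO/etcd-io/etcd:v3.4.14-$EKSD_SUFFIX $REPO/kubernetes/etcd:3.4.13-0
EOF
}

# By default only print the commands; run with DRY_RUN=0 to execute them.
image_plan | while read -r src dst; do
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "docker pull $src"
    echo "docker tag $src $dst"
  else
    sudo docker pull "$src"
    sudo docker tag "$src" "$dst"
  fi
done
```

Keeping the mapping in one place makes it easier to spot a missing target tag, which is an easy mistake to make when typing the docker tag commands by hand.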

I will add some other configurations as well.

sudo vi /etc/modules-load.d/k8s.conf

br_netfilter

sudo vi /etc/sysctl.d/99-k8s.conf

net.bridge.bridge-nf-call-iptables = 1
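If you prefer not to open vi for each file, the three files edited so far (kubeadm-flags.env, k8s.conf, and 99-k8s.conf) can be written from a script. The sketch below is one way to do it. ROOT is a hypothetical prefix I added so you can try the script in a scratch directory first, and the kubelet flags are written with plain double hyphens.

```shell
# Sketch: write the three configuration files from this post without an editor.
# ROOT is an illustrative prefix: unset, it points at a fresh scratch
# directory; set it to the empty string (and run as root) to write the
# real system paths.
set -eu

ROOT="${ROOT-$(mktemp -d)}"

mkdir -p "$ROOT/var/lib/kubelet" "$ROOT/etc/modules-load.d" "$ROOT/etc/sysctl.d"

# Extra kubelet arguments, same values as in the post.
cat > "$ROOT/var/lib/kubelet/kubeadm-flags.env" <<'EOF'
KUBELET_KUBEADM_ARGS="--cgroup-driver=systemd --network-plugin=cni --pod-infra-container-image=public.ecr.aws/eks-distro/kubernetes/pause:3.2"
EOF

# Kernel module to load at boot.
printf 'br_netfilter\n' > "$ROOT/etc/modules-load.d/k8s.conf"

# Let iptables see bridged traffic.
printf 'net.bridge.bridge-nf-call-iptables = 1\n' > "$ROOT/etc/sysctl.d/99-k8s.conf"

echo "Wrote configuration under: ${ROOT:-/}"
```

Files in /etc/modules-load.d and /etc/sysctl.d are read at boot. To apply them right away without a reboot, you can run sudo modprobe br_netfilter followed by sudo sysctl --system.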

Now let’s initialize the cluster!

sudo kubeadm init --image-repository public.ecr.aws/eks-distro/kubernetes --kubernetes-version v1.19.8-eks-1-19-4
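The two flags in the command above can also live in a kubeadm configuration file, which is easier to keep in version control. This is a minimal sketch, assuming the v1beta2 kubeadm config API that Kubernetes 1.19 uses. CONFIG_FILE and its default location are my own choices for the example.

```shell
# Sketch: the same settings as the kubeadm init flags, as a config file.
# CONFIG_FILE is an illustrative variable; pick any path you like.
set -eu

CONFIG_FILE="${CONFIG_FILE:-${TMPDIR:-/tmp}/kubeadm-eksd.yaml}"

cat > "$CONFIG_FILE" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
imageRepository: public.ecr.aws/eks-distro/kubernetes
kubernetesVersion: v1.19.8-eks-1-19-4
EOF

echo "Wrote $CONFIG_FILE"
echo "Review it, then run: sudo kubeadm init --config $CONFIG_FILE"
```

The script only writes the file and prints the next step. It does not initialize anything on its own.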

This output is mostly the same as the usual kubeadm init output. As you can see from the screenshot, it includes the kubeadm join command for the worker nodes and the steps for accessing the cluster with the kubeconfig file. Let me follow those steps and access my Kubernetes cluster installed with EKS Distro.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Let’s run the kubectl get nodes command and see the output.

As you can see, I am able to connect to the cluster and see the kubectl get nodes output, but the node is in NotReady status. The reason is that the cluster does not have a Pod network add-on yet, so I need to install a CNI plugin. I will use Calico for this demonstration.

sudo curl https://docs.projectcalico.org/manifests/calico.yaml -O

kubectl apply -f calico.yaml

After installing the Calico CNI, my master node is now in Ready state.
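Checking the STATUS column by eye works for one node, but a small filter is handy once more nodes join. The sketch below reads the output of kubectl get nodes --no-headers and prints every node whose status is not exactly Ready. The function name and the sample node names are made up for illustration.

```shell
# Sketch: list nodes that are not in Ready status.
# not_ready_nodes and the sample data below are illustrative only.
set -eu

# Reads `kubectl get nodes --no-headers` output on stdin.
# Column 1 is the node name, column 2 is the status.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Hypothetical sample output, used here instead of a live cluster.
sample='master-1   Ready      master   10m   v1.19.8-eks-1-19-4
worker-1   NotReady   <none>   1m    v1.19.8-eks-1-19-4'

printf '%s\n' "$sample" | not_ready_nodes   # prints: worker-1
```

Against a real cluster you would run kubectl get nodes --no-headers | not_ready_nodes. Note that this simple comparison also reports a cordoned node, whose status reads Ready,SchedulingDisabled.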

I have configured the worker nodes with the same prerequisites like installing Docker and disabling swap. I will pull and tag the necessary container image for the Kubernetes cluster as well with these commands.

sudo docker pull public.ecr.aws/eks-distro/kubernetes/pause:v1.19.8-eks-1-19-4;\

sudo docker tag public.ecr.aws/eks-distro/kubernetes/pause:v1.19.8-eks-1-19-4 public.ecr.aws/eks-distro/kubernetes/pause:3.2

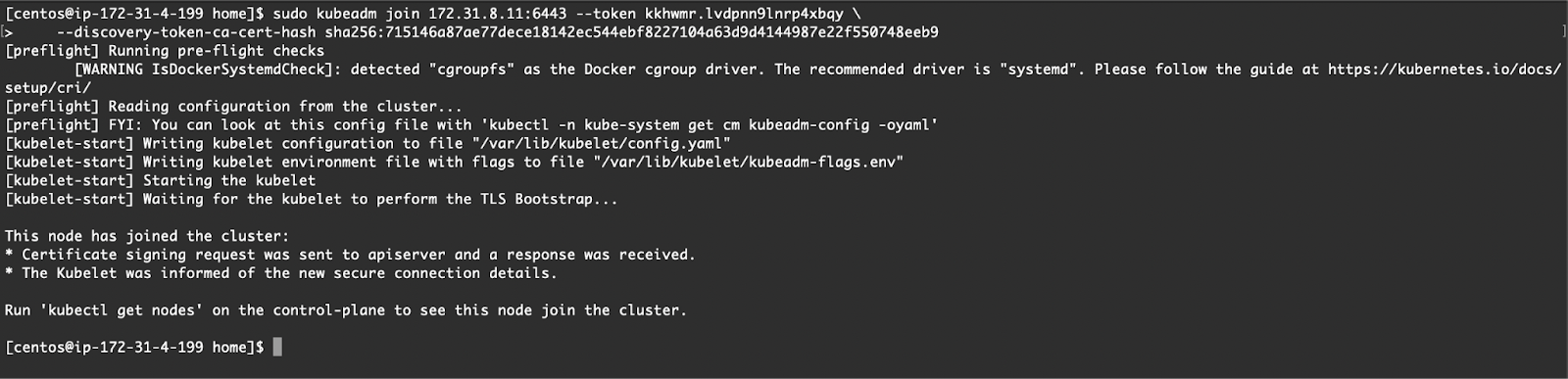

I can now move on with adding a worker node to the cluster. I will use the kubeadm join command from the kubeadm init command output.

When I run the kubectl get nodes command, I can see the other node in Ready state.

As you can see my worker node has now joined the cluster and I can see the pods in the kube-system namespace.

My Kubernetes cluster is installed with EKS Distro and ready for deploying application workloads!

Conclusion

Having a tested, verified, and reliable Kubernetes distribution for production workloads is crucial. This is why EKS is one of the most used and most popular Kubernetes distributions. Being able to run the same distribution that Amazon uses for the managed EKS service on any infrastructure and platform is a huge advantage.

If you have compliance requirements or regulatory restrictions and cannot use public cloud platforms, you can absolutely give EKS Distro a try.

Emin Alemdar

working at kloia as a Cloud and DevOps Consultant. Trying to help everyone with their adoption to DevOps culture, methods, and Cloud journey.