Why Did We Use Terragrunt in a Complex Multi-Account AWS Architecture?

One of our customers reached out to us to design a cloud-native infrastructure for one of their projects. Since this was a government project, there were many security considerations and strict network requirements, but as Kloia, we always find a way to make the process efficient.

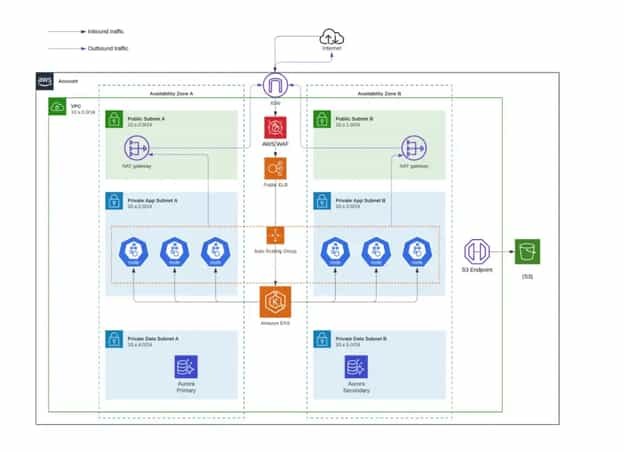

First, we planned the infrastructure. Even though the application was a Java application that had not been designed for the cloud, the customer wanted to run it on Kubernetes to make it future-ready. To comply with government regulations and keep the environment secure, we decided to split the infrastructure across five accounts. AWS recommends separating accounts by function; for example, this isolates production workloads from development and test workloads. The separation also made the infrastructure more complex. You will see the account details in the “Account Configurations” section.

In this post, you will also see how Terragrunt made it much easier for us to follow the IaC methodology with Terraform.

Terragrunt

As Kloia, we always prefer to follow the Infrastructure as Code methodology. Terraform helped us develop the necessary modules. Based on our previous experience, however, we knew it would be hard to manage this complex infrastructure with plain Terraform. One of the main problems we had encountered before was repeating the same code across modules, because we had to deploy the same resources to different accounts more than once with tiny differences.

We used Terragrunt to solve this issue. Terragrunt is a thin wrapper that provides extra tools for keeping your configurations DRY, working with multiple Terraform modules, and managing remote state. DRY means don’t repeat yourself. That helped a lot with self-repeating modules we had to use in this architecture.

Terragrunt allows you to do the following items with ease:

- Keep your Terraform code DRY: You don’t have to write the same code every time for different AWS accounts or different environments like dev, test, QA, and production. For example, the only difference between test and production environments may be the instance sizes or instance counts. You shouldn’t write or copy and paste the same configuration code for that tiny difference. With Terragrunt, you can keep a single configuration for all environments and deploy each environment from that code with only the values that differ.

- Keep your remote state configuration DRY: Terraform supports remote backend configuration to store your state files in a remote storage environment. You would usually define the backend separately in each module’s .tf files, and backend configurations in Terraform don’t support expressions, variables, or functions. This makes it hard to keep your code DRY. With Terragrunt, you can define the backend once in your root terragrunt.hcl file and let each module’s terragrunt.hcl file inherit it.

- Execute Terraform commands on multiple modules at once: For example, if you have more than one Terraform module in subfolders to deploy, you would have to manually run ‘terraform apply’ in each of the subfolders separately. With Terragrunt, you can have a terragrunt.hcl file in each subfolder and at the root level. When you run the ‘terragrunt apply-all’ command (‘terragrunt run-all apply’ in newer Terragrunt releases), Terragrunt looks through all the subfolders that contain a terragrunt.hcl file and runs ‘terragrunt apply’ in each of those folders concurrently.

Suppose you have a dependency between two modules, for example, a VPC module that needs to be created before the DB module. In that case, you can declare the dependency in the DB module’s terragrunt.hcl file and pass the VPC module’s outputs to the DB module as input variables. The DB module then waits for the VPC module to complete.

- Work with multiple AWS accounts: According to AWS best practices, the most secure way to manage AWS infrastructure is to use multiple AWS accounts. You would have a central security account and configure all your IAM roles, security monitoring, and threat detection services in this account, as we did in this project. The IAM roles defined in the Security account are then assumed to perform operations in the other accounts.

With Terragrunt, you can set the --terragrunt-iam-role argument when you run the terragrunt apply command. Terragrunt resolves the value, calls the STS AssumeRole API on your behalf, and uses the role you specified.

Of course, there are more features and use cases for Terragrunt, but these were the key ones that moved us to use Terragrunt.
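The remote state and multi-account items above can be sketched in a root terragrunt.hcl file. This is a minimal illustration, not the project's actual configuration: the bucket name, lock table name, account ID, and role name are all placeholders we made up for the example.

```hcl
# Root terragrunt.hcl (sketch). All names and IDs below are placeholders.

# Declare the S3 backend once; every module that includes this file inherits it.
remote_state {
  backend = "s3"

  # Have Terragrunt write a backend.tf file into each module's working directory.
  generate = {
    path      = "backend.tf"
    if_exists = "overwrite_terragrunt"
  }

  config = {
    bucket         = "example-terraform-state"        # placeholder bucket name
    key            = "${path_relative_to_include()}/terraform.tfstate"
    region         = "eu-west-2"
    encrypt        = true
    dynamodb_table = "example-terraform-locks"        # placeholder lock table
  }
}

# Configuration-file alternative to passing --terragrunt-iam-role on the CLI.
iam_role = "arn:aws:iam::111111111111:role/example-deploy-role"  # placeholder ARN
```

Because the state key is derived from path_relative_to_include(), each module gets its own state file path without any per-module backend code.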

Account Configurations

- Live account: Contains only production resources.

- NonLive account: Contains only non-production resources (dev, uat, training, etc.).

- Management account: Contains application management resources and build and deployment tooling.

- Logging account: Centralized logging account that is the central hub for VPC Flow Logs, S3 access logs, CloudWatch Logs, and Kubernetes container logs.

- Security account: Security monitoring and threat detection services.


Every applicable AWS resource was encrypted using the customer's own KMS key, generated using AWS CloudHSM. AWS CloudHSM is a cloud-based hardware security module (HSM) that enables you to easily generate and use your encryption keys on the AWS Cloud.

The Management account connected the networks of all accounts with Transit Gateway. AWS Transit Gateway is a service that enables customers to connect their Amazon Virtual Private Clouds (VPCs) and their on-premises networks to a single gateway. As you grow the number of workloads running on AWS, you need to scale your networks across multiple accounts and Amazon VPCs to keep up with the growth. This setup enabled us to encrypt the network traffic between accounts automatically. Network traffic was strictly restricted using Network ACLs.

We chose Amazon EKS as the container orchestration tool. Amazon Elastic Kubernetes Service (Amazon EKS) is a managed service that you can use to run Kubernetes on AWS without needing to install, operate, and maintain your Kubernetes control plane or nodes. Amazon EKS runs Kubernetes control plane instances across multiple Availability Zones to ensure high availability. It automatically detects and replaces unhealthy control plane instances, and it provides automated version updates and patches for them.

AWS Config rules are managed in the Security account. AWS Config provides a detailed view of the configuration of AWS resources in your AWS account. This view includes how the resources are related to each other and how they were configured in the past, so you can see how the configurations and relationships change over time.

We decided to use the NGINX Ingress Controller to expose containers to the internet. All internal pod-to-pod (east-west) communications were encrypted with AWS App Mesh. AWS App Mesh is a service mesh that makes it easy to monitor and control services. App Mesh standardizes how your services communicate, giving you end-to-end visibility and helping to ensure your applications' high availability.

For scanning S3 Buckets, we used Amazon Macie. It is a fully managed data security and data privacy service that uses machine learning and pattern matching to discover and protect your sensitive data in AWS.

For container runtime scanning in EKS Clusters, we deployed Sysdig Falco.

We preferred Fluent Bit for shipping container logs to their respective CloudWatch log groups in the Logging account. All IAM permissions for EKS workloads were handled with IAM Roles for Service Accounts (IRSA).

To provide monitoring for all Kubernetes Clusters, we installed Prometheus and Grafana in all clusters.

Infrastructure as Code

To create the whole infrastructure, we developed the following modules with Terraform to deploy to the accounts:

- Amazon Aurora

- AWS Config

- Amazon CloudWatch Alarms

- Amazon ECR

- Amazon EKS

- Amazon GuardDuty

- IAM OpenID Connect (OIDC) Identity Providers

- Amazon CloudWatch Log Groups

- AWS Secrets Manager

- AWS Security Hub

- WAF

- WAF IP Sets

- k8s-aws-ebs-csi-driver

- k8s-aws-load-balancer-controller

- k8s-calico

- k8s-elasticsearch

- k8s-customer-app

- k8s-falco

- k8s-fluentbit

- k8s-prometheus-exporter

Modules prefixed with k8s are EKS components installed in the cluster.

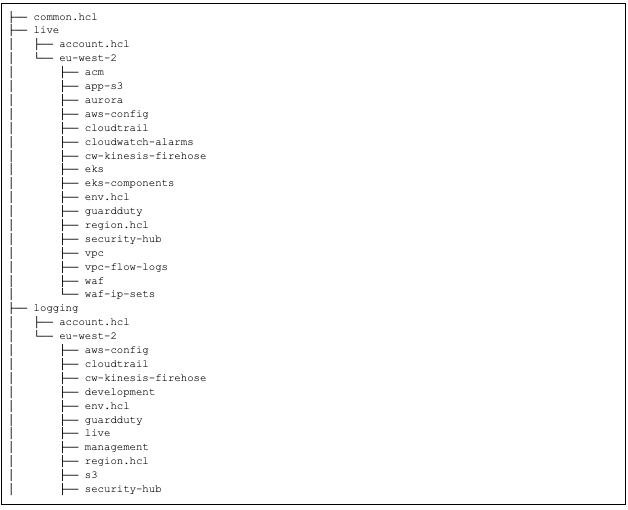

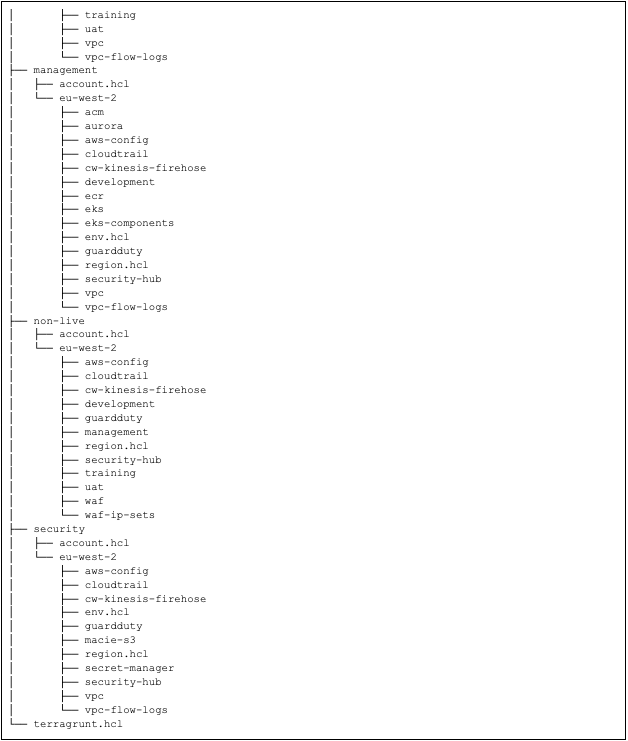

Terragrunt can define your modules or whole stack and then deploy your stack to different environments with ease. Below is the complete folder structure for the Terragrunt repository:

As you can see from the folder structure, most of these services are configured in every account with tiny differences. For example, AWS Config, Amazon GuardDuty, AWS CloudTrail are present in every account in this environment. Without Terragrunt, we would have to manually configure each of the services separately and run terraform apply manually on each account’s subfolder.
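To illustrate how one of these per-account configurations might look, here is a minimal sketch of a module-level terragrunt.hcl file. The file path, the module source location, and the input variable names are assumptions made for the example; they are not taken from the project's repository.

```hcl
# nonlive/eu-west-2/eks/terragrunt.hcl (hypothetical path)

# Inherit the settings declared in the root terragrunt.hcl
# (remote state, provider, and so on).
include {
  path = find_in_parent_folders()
}

# Point at the shared Terraform module; the same source is reused in every account.
terraform {
  source = "../../../modules/eks"   # hypothetical module location
}

# Only the values that differ between accounts live here.
# The variable names are illustrative and depend on the module's variables.tf.
inputs = {
  node_instance_type = "t3.medium"
  node_count         = 2
}
```

The Live account would carry an almost identical file with, for example, a larger instance type and a higher node count, while the module code itself stays untouched.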

You would typically add your provider configuration to each module’s .tf files. With Terragrunt, however, you can define this information once at the root level and reference it from your module’s .hcl file, as in the example below.

    locals {
      # Automatically load environment-level variables
      environment_vars = read_terragrunt_config(find_in_parent_folders("env.hcl"))

      # Automatically load account-level variables
      account_vars = read_terragrunt_config(find_in_parent_folders("account.hcl"))

      # Automatically load region-level variables
      region_vars = read_terragrunt_config(find_in_parent_folders("region.hcl"))

      # Automatically load variables shared by all accounts
      common_vars = read_terragrunt_config(find_in_parent_folders("common.hcl"))

      # Extract out common variables for reuse
      env        = local.environment_vars.locals
      aws_region = local.region_vars.locals.aws_region
    }
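One common way to turn these locals into a provider configuration is a generate block in the same file. The sketch below assumes the aws_region local defined above and shows only the region setting; a real provider block would likely carry more settings.

```hcl
# Sketch: write a provider.tf into every module directory Terragrunt runs in.
generate "provider" {
  path      = "provider.tf"
  if_exists = "overwrite_terragrunt"

  # Terragrunt interpolates local.aws_region before writing the file.
  contents = <<EOF
provider "aws" {
  region = "${local.aws_region}"
}
EOF
}
```

With this in place, individual modules do not need their own provider block; changing region.hcl is enough to point a whole folder of modules at another region.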

If you have dependencies between your modules, for example, if some resources need to wait for the VPC module’s completion, you would typically add a data block to your .tf file. With Terragrunt, you can simply reference the other module, as in the example below.

    dependency "vpc" {
      config_path = "../vpc"
    }
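A slightly fuller sketch shows how the dependency's outputs could be passed on as inputs. The vpc_id output name and the mock value are assumptions; the real names depend on what the VPC module exports.

```hcl
dependency "vpc" {
  config_path = "../vpc"

  # Placeholder outputs so validate and plan can run before the VPC exists.
  mock_outputs = {
    vpc_id = "vpc-00000000"   # dummy value, never applied
  }
  mock_outputs_allowed_terraform_commands = ["validate", "plan"]
}

# Feed the VPC module's output into this module's input variable.
inputs = {
  vpc_id = dependency.vpc.outputs.vpc_id   # hypothetical output name
}
```

Terragrunt reads the outputs from the VPC module's state, so the dependent module needs no data source of its own to look up the VPC.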

If someone wants to deploy every component to every account, they just need to run a terragrunt apply command and watch the environment being created. If only the logging account needs to be deployed, for example, they change to the logging directory and apply Terragrunt there:

    $ cd logging/eu-west-2 && terragrunt apply

This results in an easily defined and self-documented infrastructure repository.

Conclusion

This blog post shows how we can follow Infrastructure as Code methodology with Terragrunt and how Terragrunt works.

With Terragrunt, you can define Terraform modules to reuse standard components such as credentials, logging, SSL certificates, or common patterns for managing the infrastructure lifecycle. Terraform modules let you automate the creation of environments within AWS, and Terragrunt makes it easy to define and test these modules in code. Keep your infrastructure clean and your Terraform modules DRY. Terraform modules should be independent, reusable, and simple to develop and modify, and Terragrunt's configuration layer helps you build modules that are easily reused and highly testable. Keep your Terraform modules fresh by writing tests that validate both the input data and the output data.

We have benefited from Terragrunt because it saved us a lot of time and effort. At the same time, it made our code much simpler and more readable.

If you have more than one environment and spend a lot of time writing too many .tf files with nearly the same configurations, you should check out Terragrunt.

Emin Alemdar

Working at Kloia as a Cloud and DevOps Consultant. Trying to help everyone with their adoption of DevOps culture and methods and with their Cloud journey.