Why do we use CNI Plugins on Kubernetes?

Kubernetes does not prescribe a single networking implementation. This approach keeps Kubernetes vendor and provider independent, so you can implement your own networking model via CNI plugins.

By default, Kubernetes uses the kubenet plugin to handle incoming requests. kubenet is a very basic plugin that doesn't have many features. If you need more features, such as isolation between namespaces, IP filtering, traffic mirroring, or different load-balancing algorithms, you should use another network plugin.

A CNI plugin allocates a virtual interface on the host instance where the pod is running and then attaches this virtual interface to the pod.
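
To make that contract more concrete, here is a minimal sketch of how a container runtime talks to a plugin, based on the CNI specification: the runtime passes parameters such as `CNI_COMMAND`, `CNI_NETNS`, and `CNI_IFNAME` as environment variables, sends the network configuration as JSON on stdin, and expects a JSON result back. The function name `handle_add`, the plugin type `toy-plugin`, the network name, and the IP address are all hypothetical, and no real interface is created.

```python
import json


def handle_add(env, stdin_config):
    """Toy stand-in for a CNI plugin's ADD command (creates no real interfaces)."""
    if env["CNI_COMMAND"] != "ADD":
        raise ValueError("this sketch only handles ADD")
    conf = json.loads(stdin_config)
    # A real plugin would create the virtual interface here, keep one end on
    # the host, and move the other end into the namespace at CNI_NETNS.
    return {
        "cniVersion": conf["cniVersion"],
        "interfaces": [
            {"name": env["CNI_IFNAME"], "sandbox": env["CNI_NETNS"]},
        ],
        # Hypothetical address; a real plugin would obtain it from IPAM.
        "ips": [{"address": "10.244.1.5/24", "interface": 0}],
    }


# Simulated invocation: these values are illustrative assumptions.
env = {
    "CNI_COMMAND": "ADD",
    "CNI_CONTAINERID": "example-container-id",
    "CNI_NETNS": "/var/run/netns/example",
    "CNI_IFNAME": "eth0",
}
config = json.dumps(
    {"cniVersion": "1.0.0", "name": "example-net", "type": "toy-plugin"}
)
result = handle_add(env, config)
print(json.dumps(result, indent=2))
```

Real plugins are standalone executables, usually written in Go; the sketch only illustrates the shape of the request and the response.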

CNI plugins work at different OSI network layers to cover their use cases. Many of them work at Layer 2, Layer 3, or Layer 4. CNIs that work at Layer 2 are generally faster but don’t have as many features as Layer 3 or Layer 4 based CNIs.

Here are the alternatives that we think are worth mentioning:

Calico:

Calico is an open-source project maintained by Tigera. It provides networking and network security solutions for containers and is best known for its performance, flexibility, and power.

Use-cases:

Calico can be used on many Kubernetes platforms (kops, Kubespray, Docker Enterprise, etc.) to block or allow traffic between pods and namespaces. Calico also supports an extended set of network policy capabilities, and these policies can be integrated with Istio.
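
As an illustration of blocking or allowing traffic between pods and namespaces, the sketch below builds a standard Kubernetes `NetworkPolicy` manifest (API group `networking.k8s.io/v1`) that only admits ingress from pods in the same namespace. The policy name `allow-same-namespace`, the namespace `team-a`, and the helper function name are assumptions made for the example; Calico's extended policy resources are not shown.

```python
import json


def same_namespace_only_policy(namespace):
    """Build a NetworkPolicy that allows ingress only from the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            # An empty podSelector applies the policy to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches only pods in
            # the policy's own namespace, so cross-namespace traffic is dropped.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


# "team-a" is a hypothetical namespace used for illustration.
print(json.dumps(same_namespace_only_policy("team-a"), indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`. The policy only takes effect when the cluster's CNI plugin enforces NetworkPolicy, which Calico does.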

Pros / Cons

| Pros | Cons |
| --- | --- |
| IPVS support | No encryption |
| Configurable MTU | No multicast support |
| Configurable pod CIDR | |
| IPv6 support | |
| Defense against DoS attacks | |
| Service mesh is possible with Istio | |
| Default choice for most Kubernetes-based platforms | |
| Plans to add BPF in the near future | |

AWS-CNI:

AWS-CNI, the default networking plugin for AWS EKS, is an open-source project maintained on GitHub. It uses AWS Elastic Network Interfaces (ENIs) to connect each pod to the VPC, so pods have a direct connection inside the VPC without an extra hop.

You can see AWS EC2 machine types and network performance in this spreadsheet.

Use-cases:

If you are working on latency-sensitive applications, the AWS-CNI plugin lets traffic reach your pods directly.

The AWS-CNI plugin creates a virtual network interface on the host instance and a network route directly to the pods, which can help decrease network latency.

Pros/Cons

| Pros | Cons |
| --- | --- |
| Direct traffic via ENI. | AWS limits the number of secondary IPs per ENI, so with the AWS VPC CNI plugin you cannot schedule a pod on an instance that has already reached its ENI limit. |
| The ability to enforce network policy decisions at the Kubernetes layer if you install Calico. | Unlike the AWS VPC CNI plugin, Calico uses the podCidrBlock value and assigns IP addresses to pods without any such limit. |
| Best and fastest CNI when running Kubernetes on AWS. Assigns ENI-backed VPC addresses to pods, so all standard AWS networking can be used for routing. Note: You should still use Calico for network policy. | |
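
The ENI limit above can be estimated with the formula AWS publishes for the VPC CNI plugin in its default mode (secondary IPs, no prefix delegation): each ENI's primary address is not handed to pods, and two pods that use host networking are added on top. The sketch below applies that formula; the function name is hypothetical, and the per-instance ENI figures are the ones AWS documented for these types at the time of writing, so check the current AWS tables before relying on them.

```python
def max_pods(enis, ipv4_per_eni):
    """Estimate schedulable pods per node for the AWS VPC CNI (secondary-IP mode).

    One address per ENI is the ENI's own primary IP; the +2 accounts for
    pods that run with host networking and need no VPC address of their own.
    """
    return enis * (ipv4_per_eni - 1) + 2


# (ENIs, IPv4 addresses per ENI) per instance type; assumed from AWS docs.
instance_limits = {
    "t3.medium": (3, 6),
    "m5.large": (3, 10),
}

for name, (enis, ips) in instance_limits.items():
    print(f"{name}: {max_pods(enis, ips)} pods")
# t3.medium: 17 pods
# m5.large: 29 pods
```

Once a node reaches this number, the scheduler has to place new pods on another node even if CPU and memory are still available.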

Weave Net:

Weave Net is a Weaveworks project. It was originally developed for Docker workloads and later evolved to include network plugins for Mesosphere and Kubernetes. It has a straightforward installation and configuration. Weave Net uses the kernel’s Open vSwitch datapath module for pod-to-pod communication, which avoids going through userspace, a path known to be slow.

Use-cases:

Weave Net creates an OSI Layer 2 network and operates in kernel space. Only Linux is supported because of how Weave Net operates. It also supports Network Policies but creates an extra DaemonSet to manage them. Finally, Weave Net supports easily configurable, out-of-the-box encryption at the CNI level, but this comes with a hefty reduction in network speed.

Pros/Cons

| Pros | Cons |
| --- | --- |
| Kernel-level communication; also uses VXLAN encapsulation between hosts, which enables monitoring. | Linux only. |
| Layer 2, fast. | NetworkPolicy requires an extra DaemonSet. |
| Supports encryption. | |
| Supports NetworkPolicy. | |

Cilium:

Cilium is an open-source project developed by Linux kernel developers for securing network connectivity between services on Linux-based container platforms. Cilium uses the BPF technology of the Linux kernel, which enables the dynamic insertion of powerful security and visibility logic into the kernel.

Use-cases:

Cilium can be used with other CNIs (AWS-CNI, Calico, etc.). In these hybrid modes, it can provide multi-CNI capabilities. With its cluster-mesh feature, Cilium enables pod-to-pod connectivity across clusters: multiple Kubernetes clusters can be connected using Cilium, and the clusters can access each other using internal DNS.

Pros/Cons

| Pros | Cons |
| --- | --- |
| Cluster mesh support. | Built-in provisioner support |
| Layer 7/HTTP awareness. | A more complicated setup compared to other CNI plugins |
| Supports encryption (beta). | |
| Supports AWS CNI, Calico plugin. | |
| Has better performance because it uses BPF. | |

Flannel:

Flannel is an open-source project created by CoreOS, with straightforward configuration and management. The project currently has only one maintainer and focuses only on critical fixes and bugs, so we do not recommend it.

Use-cases:

If you need connectivity between two hosts on Layer 2 without any network tunneling, then this CNI is one of the solutions.

Other specific use-case CNIs:

Istio CNI: Istio CNI is an optional component of the Istio service mesh. Normally, Istio adds initContainers with the “NET_ADMIN” capability to meshed workloads; Istio CNI removes this requirement, which is more secure.

AKS CNI: AKS CNI comes with AKS clusters and assigns pod IPs from subnets. It has the same limitation as AWS CNI: the total allocatable IP range can be limited. This also imposes a default limit of 30 pods per node, which can be increased up to 250 per node (not recommended).

Benchmark Results of CNI Plugins

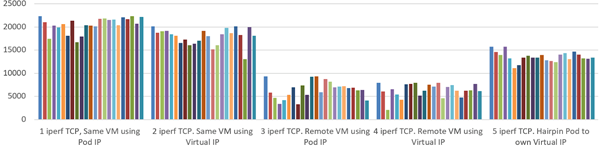

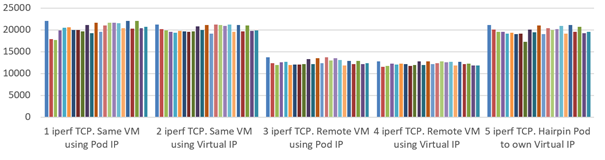

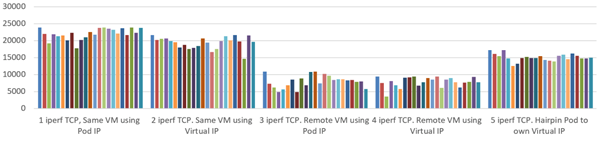

We performed a Kubernetes performance test using the official Kubernetes network performance tool.

You can access the tool from here: NetPerf

Plugins benchmarked: AWS CNI, Calico, Weave.

Conclusion

Each CNI plugin has its own strengths. For more advanced security and network-policy-intensive workloads, you can use Calico.

If you use a cloud provider’s managed Kubernetes service, you can use its CNI implementation. It is advantageous to get support and to use CNIs that are tested for the environment. But if you have hybrid cloud setups and some on-premises infrastructure, then you can use Calico because it works everywhere.

If you value performance above all, then Weave is a great choice. Its technology and community are great. You won’t run into as many issues with it, but make sure that your hardware and kernel support Weave Net’s requirements.

Image reference for diagram: https://neuvector.com/network-security/advanced-kubernetes-networking/

Akın Özer

DevOps and Cloud Consultant @kloia